Facebook, Fake News, and AI: Yet Another “HUGE” Problem in the Digital Era

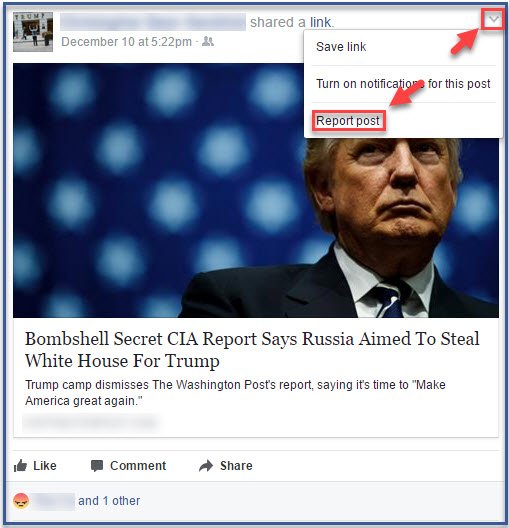

The proliferation of misinformation, or fake news, on social media platforms has become a serious problem. Facebook – widely considered the main culprit in this controversy – is leaning heavily on machine learning to combat it.

In late 2016, Mark Zuckerberg, Facebook’s founder and CEO, found himself in an unenviable spotlight. Donald Trump’s election set off national outrage, and many attributed his shocking victory to fake news stories spread on Facebook. Zuckerberg’s response: “Personally, I think the idea that fake news on Facebook ― of which it’s a small amount of content ― influenced the election in any way is a pretty crazy idea.”

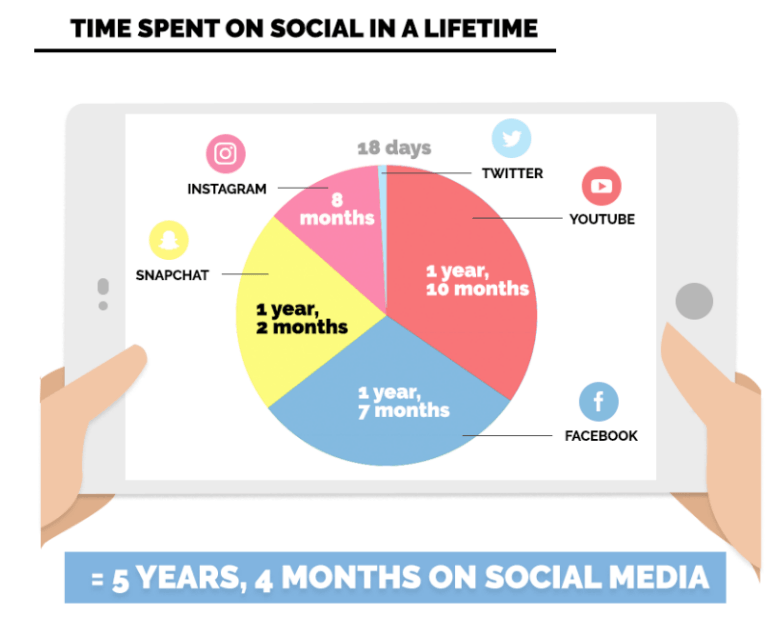

We live in a far more digitally driven world than we did even a decade ago, largely because of the advent of social media, and this shift has significantly changed how people consume day-to-day news. The average American spends a total of 5 years and 4 months on social media over a lifetime – 1 year and 7 months of that on Facebook. [1]

Since 2016, Zuckerberg has changed his tone. “Calling that [idea] crazy was dismissive and I regret it,” he wrote in a September 2017 Facebook post. “This is too important an issue to be dismissive.” [2] With 2.3 billion monthly active users, Facebook is the largest social network in the world. [3] It has been involved in many controversies, ranging from data protection issues to the spread of terrorist propaganda, but the proliferation of fake news has drawn an especially large share of negative media attention recently.

So how is Facebook addressing these issues today?

On its Q2 2018 earnings call, Facebook outlined its approach: “We’re getting rid of the financial incentives for spammers to create fake news, much of which are economically motivated. We stop pages that repeatedly spread false information from buying ads. And we also used AI to prevent fake accounts that generate a lot of the problematic content from ever being created in the first place.” [4] Facebook’s fact-checking project, which debuted last spring, now covers 14 countries and uses machine learning to remove duplicated fake news stories that have spread to its users. [5]

But is machine learning enough to stop the spread of fake news?

Yann LeCun, Facebook’s chief AI scientist, thinks not: “AI is part of the answer, but only part.” According to LeCun, machine learning software is “fairly effective at blocking extremist videos, flagging potential hate speech and identifying fake accounts. But, when it comes to issues such as fake news, systems still cannot understand enough context to be effective.” [6]

Jim Kleban, a Facebook product manager tasked with fighting misinformation, says, “For the foreseeable future, all these systems will require hybrid solutions.” Machine learning cannot yet judge whether a statement is true, but it can flag content for human fact-checkers to review. Currently, AI systems scan millions of shared links to flag suspicious content. Content that fact-checkers rule false is then ranked lower in users’ news feeds, which, according to Kleban, reduces its future views by 80%. [7]

But why is this content not removed altogether?

Facebook COO Sheryl Sandberg explains, “We don’t think we can or should be the arbiters of the truth, but we know people want the truth, and it’s really third parties we rely on to do that.” [8] Accordingly, Facebook has partnered with third-party fact-checkers such as the Associated Press, Factcheck.org and PolitiFact, whose reviews are paired with its AI-driven flagging. [5]

Facebook has also turned to acquiring AI startups that have already been addressing these issues, including its most recent acquisition of London-based Bloomsbury AI in July 2018. Facebook says Bloomsbury AI “will help us further understand natural language and its applications.” [9]

Forming partnerships and making acquisitions seems to be Facebook’s most effective strategy, especially because this approach can be implemented quickly, which matters given how rapidly fake news has spread and continues to spread. If Facebook keeps looking outward for help, this approach should continue to pay off in the fight against fake news. Still, AI is not the sole solution to this problem, and the issue will take time to resolve.

In the meantime, Facebook would benefit from presenting a more accountable and united front. It has become better at reacting to individual instances of fake news but has made less effort to get ahead of the problem by raising awareness of it, instead seemingly trying to keep it out of view. After all, Facebook is not the only victim of fake news: Twitter and Google’s YouTube face the same challenge, and Facebook might benefit from partnering with these other platforms to address it.

But the crucial questions remain: If machine learning is only one aspect of the solution, what else can Facebook be doing to address this widespread issue? And how can Facebook better manage expectations until this has been resolved?


Sources:

[1] “How Much Time Do We Spend on Social Media? [Infographic].” Mediakix, 18 Sept. 2018, mediakix.com/2016/12/how-much-time-is-spent-on-social-media-lifetime/.

[2] Zuckerberg, Mark. “Mark Zuckerberg – Facebook Post September 2017.” Facebook, 27 Sept. 2017, www.facebook.com/zuck/posts/10104067130714241.

[3] Facebook, Q3 2018 Earnings Transcript, p. 9, accessed November 10, 2018.

[4] Facebook, Q2 2018 Earnings Transcript, p. 5, accessed November 10, 2018.

[5] Vanian, Jonathan. “Facebook Expanding Fact-Checking Project to Combat Fake News.” Fortune, Business Source Complete, EBSCO, November 11, 2018.

[6] Kahn, Jeremy. “AI Is ‘Part of the Answer’ to Fake News, Facebook Scientist Says.” Bloomberg.com, Bloomberg, 23 May 2018, www.bloomberg.com/news/articles/2018-05-23/ai-part-of-the-answer-to-fake-news-facebook-scientist-says.

[7] Strickland, Eliza. “AI-Human Partnerships Tackle ‘Fake News.’” Business Source Complete, EBSCO, November 10, 2018.

[8] Facebook, Morgan Stanley Presentation Transcript, p. 4, accessed November 11, 2018.

[9] Facebook Academics. “Bloomsbury AI Acquisition – Press Release.” Facebook, 3 July 2018, www.facebook.com/academics/photos/a.535022156549196/1897623680289030/.

This is a fascinating topic! As sharing platforms begin to bear responsibility for the content they are used to disseminate, I wonder whether fact-checking and filtering might swing too far in the opposite direction, disincentivizing and burying less ‘factual’ media such as editorials, opinion pieces, and honest (yet uninformed) dialogue. Will opinion-based reporting inevitably pay an unintended cost while the filters are calibrated?

I wonder how the AI algorithm deals with stories or articles that stretch the truth, but may not be outright falsehoods. Even humans are often divided on whether an article is intentionally misleading or simply exaggerates a certain perspective. I wonder if, like Watson, Facebook’s algorithm is also able to predict how “certain” it is that the article is truly fake news.

I thought this was a really compelling topic, particularly how Facebook is using machine learning and AI to curb the proliferation of fake news. One question I had was how Facebook maintains objectivity when it combines AI and human fact-checkers to decide whether news sources are legitimate. How will it demonstrate impartiality, or judge what news is “fake” when the lines are blurrier? An interesting perspective on this was raised during Facebook’s recent Senate hearings, when Republican senators made numerous allegations of liberal bias.

This was a really compelling and controversial topic. Since the start of this debate, I have wondered whether Facebook is a pure-play social platform or has been shifting toward being more of a media outlet, as its users increasingly depend on their news feeds for the latest news. In this context, I would be concerned about how effectively machine learning can quickly separate completely false articles from partly true or exaggerated ones, and how to balance that with freedom of speech.

Thank you for picking such a controversial and relevant topic! I really enjoyed reading this, as it’s a topic I have wrestled with. While I want to believe that Facebook and other social media platforms, as private institutions, are simply platforms for others to communicate their opinions, what we have seen in recent years is that people and groups are too manipulative to be given that freedom. Today, so much information is digested from social media that it is difficult for even an educated reader to distinguish fact from fiction and to filter out biases. With their widespread reach, Facebook and other platforms hold enormous power over how information is disseminated. I would argue that this power gives them a critical responsibility to be a positive influence on that dissemination, which can significantly affect individual, group, national and global livelihoods. Though Facebook may not feel it is its “right” to remove such content, I think it would be incredibly effective if such organizations gave articles and other content a “truth score” to help readers weigh the available information and reach reasonable conclusions.