Equipping the Revolution: Hardware Wars in the Age of Machine Learning

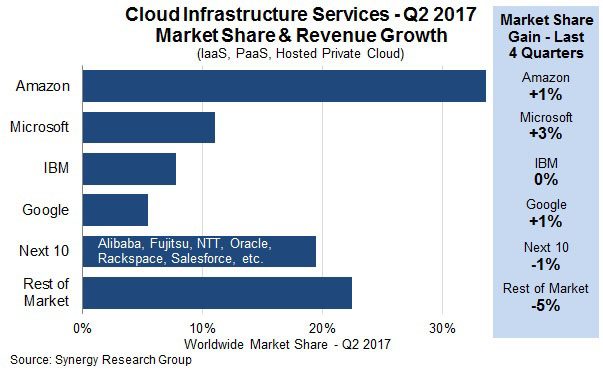

In the machine learning gold rush, Amazon, Google, and Microsoft are competing for cloud computing dominance. Currently second in the market, Microsoft is poised to gain significant market share through its major investment in FPGAs (field-programmable gate arrays) for advanced deep neural network applications.

From asking a smart speaker for a cordon bleu recipe to commuting to work in a shared ride, machine learning has infiltrated almost every aspect of daily life. As traffic prediction models, movie recommendation engines, and spam filtering algorithms become more pervasive, the hardware required to perform these complex calculations must improve in step. Although many startups are trying to disrupt this computational hardware space, Amazon, Google, and Microsoft collectively hold over 50% of the cloud market, and their share continues to grow every year [1]. Microsoft in particular looks poised to gain significant market share in the coming years with its focus on FPGAs (field-programmable gate arrays) for machine learning applications [2].

Before the amplified focus on machine learning, Amazon, Google, and Microsoft were already competing for cloud services market share. By launching Amazon Web Services (AWS) in 2006, Amazon kicked off a race to attract developers to its platform as quickly as possible, unveiling the popular S3 (cloud storage) and EC2 (compute capacity) offerings [3]. After AWS enjoyed nearly four years without significant competition, Microsoft and Google joined the race in 2010 with Windows Azure and Google Storage for Developers (a precursor of Google Cloud Platform), respectively [4][5]. Although developers experienced these services only through the cloud, the introduction of AWS, Google Cloud, and Azure drew increased attention to efficient, optimized hardware that could lower costs and improve customer acquisition.

As these cloud computing platforms grew to supply much of the web, interest in machine learning grew significantly. In 2004, Kyoung-Su Oh and Keechul Jung were among the first to propose using a specialized type of processor, the GPU (graphics processing unit), to implement neural network models [6]. At the time, GPUs were used to render graphics in video games and computer-generated animation [7]. Once this neural network application emerged, the industry quickly jumped on board and began marketing GPUs for heavy computation as well as graphics. In the decade after Oh and Jung’s paper, adoption of deep learning applications grew steadily and caught the attention of the Big Three. As it often does, AWS moved first, launching the P2 instance type in September 2016 and giving developers access to cloud GPUs for running machine learning models [8]. As with cloud services a decade earlier, Microsoft and Google followed suit with Azure N-Series instances and Google Cloud GPUs [9][10]. By 2017, the race had shifted its focus from cloud storage and computation for web services to offering best-in-class machine learning services in the cloud.

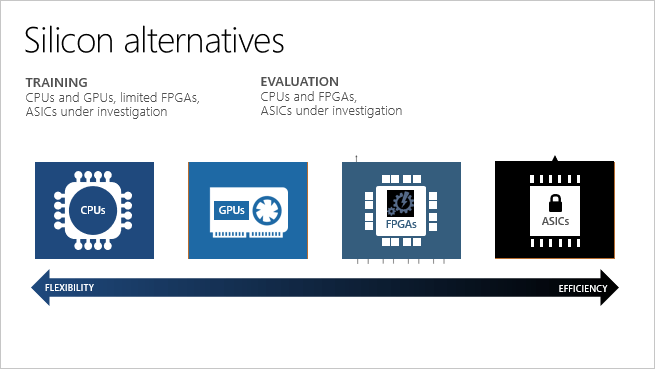

Second in market share behind AWS, Microsoft needed to set itself apart. Leadership understood that widespread adoption of machine learning, specifically deep learning and neural networks, would increase demand for differentiated and customizable products. With cloud GPUs becoming a commodity, Microsoft decided to focus on FPGAs to gain machine learning hardware market share. Like GPUs, FPGAs can perform the high-speed computation that machine learning requires. Unlike GPUs, they are highly customizable and can be considerably more efficient for a given workload [11].

Developers simply run code on a GPU's fixed architecture. With an FPGA, by contrast, they can program the hardware itself to fit the exact model they are running. If the ML model changes, the developer can reprogram the FPGA accordingly.

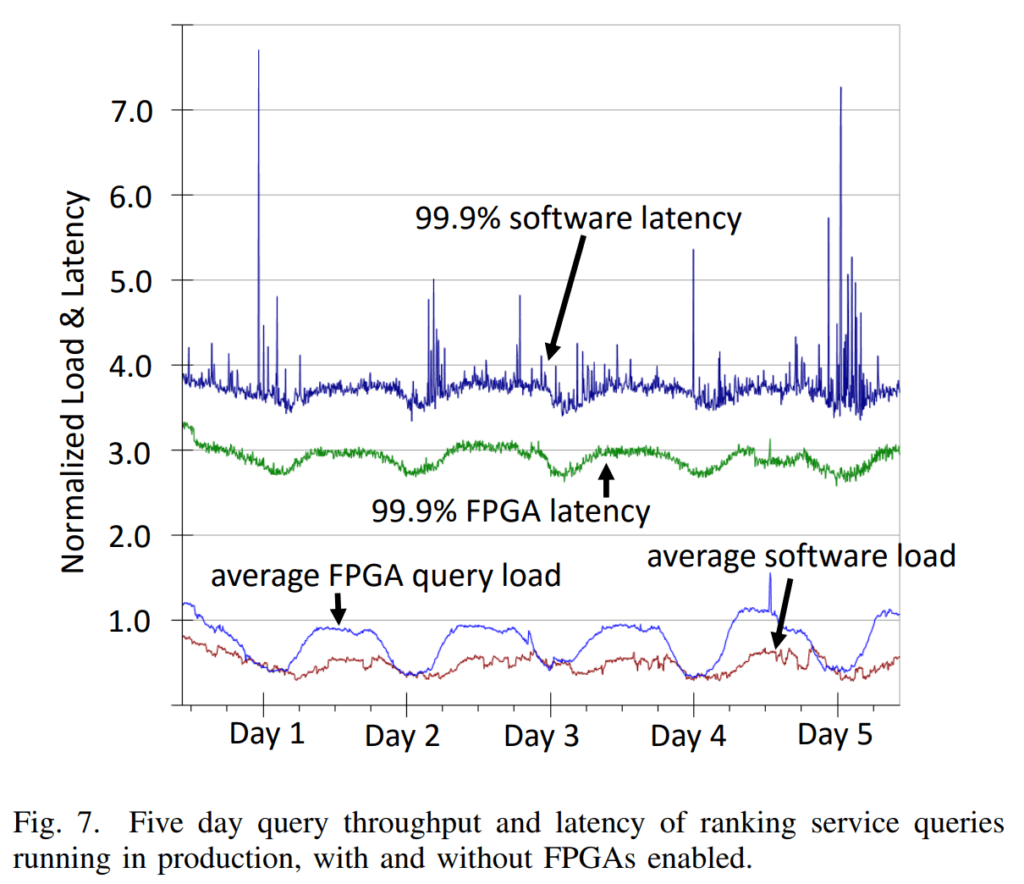

In the short term, Microsoft decided to deploy FPGAs in its data centers to improve the efficiency and speed of the Bing search engine. This project, codenamed Catapult, improved the ranking throughput of each server by 95% at a fixed latency. Alternatively, at equivalent throughput, it reduced tail latency by 29% [12].

Microsoft’s internal research into FPGAs for complex computational tasks produced bottom-line improvements before the company had released anything to the public. To sustain this hardware advantage, Microsoft aligned its long-term ML strategy around two goals: improving internal systems with FPGAs and offering FPGA cloud capabilities to third-party developers on Azure. Project Brainwave, the follow-on to Catapult, became available to Azure customers in preview in May 2018 as part of that long-term plan [13]. Microsoft describes Brainwave as the world’s largest cloud investment in FPGAs. It lets developers accelerate their machine learning models directly through Azure [14].

Going forward, Microsoft needs to double down on FPGAs for machine learning by working with FPGA manufacturers such as Intel and Xilinx to keep pace with the ever-increasing complexity of deep learning techniques. As deep neural networks continue to evolve, FPGAs could become the most effective hardware choice for ML developers. Ensuring that Microsoft holds the most advanced hardware is therefore key to growing its market share [15][16]. However, with Amazon rolling out F1 FPGA instances and Google focusing on its Cloud TPUs (tensor processing units), will Microsoft be able to keep its competitive advantage by partnering with Intel [17][18]? Does Microsoft have enough marketing power to persuade machine learning developers to adopt FPGAs and learn how to use them?

(798 words)

References

1. https://www.cnbc.com/2018/04/27/microsoft-gains-cloud-market-share-in-q1-but-aws-still-dominates.html
2. https://www.top500.org/news/microsoft-launches-fpga-powered-machine-learning-for-azure-customers/
3. https://aws.amazon.com/about-aws/whats-new/2006/03/13/announcing-amazon-s3---simple-storage-service/
4. https://blogs.microsoft.com/blog/2010/02/01/windows-azure-general-availability/
5. http://googlecode.blogspot.com/2010/05/google-storage-for-developers-preview.html
6. https://doi.org/10.1016/j.patcog.2004.01.013
7. https://www.webcitation.org/5msQZJdyo?url=http://www.blacksmith-studios.dk/projects/downloads/bumpmapping_using_cg.php
8. https://aws.amazon.com/blogs/aws/new-p2-instance-type-for-amazon-ec2-up-to-16-gpus/
9. https://azure.microsoft.com/en-us/blog/azure-n-series-general-availability-on-december-1/
10. https://cloud.google.com/blog/products/gcp/cloud-machine-learning-family-grows-with-new-api-editions-and-pricing
11. https://www.forbes.com/sites/davidteich/2018/06/15/management-ai-gpu-and-fpga-why-they-are-important-for-artificial-intelligence/#2d73351b7159
12. https://www.microsoft.com/en-us/research/wp-content/uploads/2016/02/Catapult_ISCA_2014.pdf
13. https://www.top500.org/news/microsoft-launches-fpga-powered-machine-learning-for-azure-customers/
14. https://docs.microsoft.com/en-us/azure/machine-learning/service/concept-accelerate-with-fpgas
15. http://jaewoong.org/pubs/fpga17-next-generation-dnns.pdf
16. https://www2.deloitte.com/content/dam/Deloitte/global/Images/infographics/technologymediatelecommunications/gx-deloitte-tmt-2018-nextgen-machine-learning-report.pdf
17. https://aws.amazon.com/blogs/aws/developer-preview-ec2-instances-f1-with-programmable-hardware/
18. https://cloud.google.com/blog/products/gcp/cloud-tpu-machine-learning-accelerators-now-available-in-beta

I’m curious to understand whether the same resistance to change is present in this industry as in many organizations and industries around the world. Your article makes it seem as if Microsoft has a clear first-mover advantage in developing what is clearly a better product than the status quo. The challenges then seem to be 1) maintaining that head start and 2) making FPGAs the new normal for machine learning developers. Are there key stakeholders that Microsoft needs to engage with? Are there obvious customers or influential users who could usher in accelerated adoption of the new technology?