Emotionally Aware Vehicles: The Future of Road Safety?

How Emotion Artificial Intelligence can be used to monitor drivers and increase road safety

The Road Safety Problem

The global statistics on road traffic crashes are unsettling. According to the World Health Organization (WHO), road traffic crashes are responsible for 1.25 million deaths and between 20 and 50 million non-fatal injuries every year[i]. If left unchecked, road traffic accidents are forecast to become the seventh-leading cause of death by 2030[ii]. Among the leading causes of fatal road accidents are alcohol impairment, distracted driving and drowsiness, which were responsible for 10,497, 3,450 and 803 fatalities respectively in the United States in 2016.[iii] Affectiva, an emotion artificial intelligence company, is working with car manufacturers to apply machine learning to these primary causes of road accidents and so enhance driver safety.

Machine Learning at Affectiva

Founded in 2009 in Cambridge, Massachusetts, Affectiva develops emotion-sensing artificial intelligence software that analyzes human facial expressions and physiological responses. Its products have been used in a variety of applications, including market research, advertisement assessment, customer service robots and augmented reality systems for autism. The company is now actively exploring automotive artificial intelligence to monitor drivers and enhance road safety.

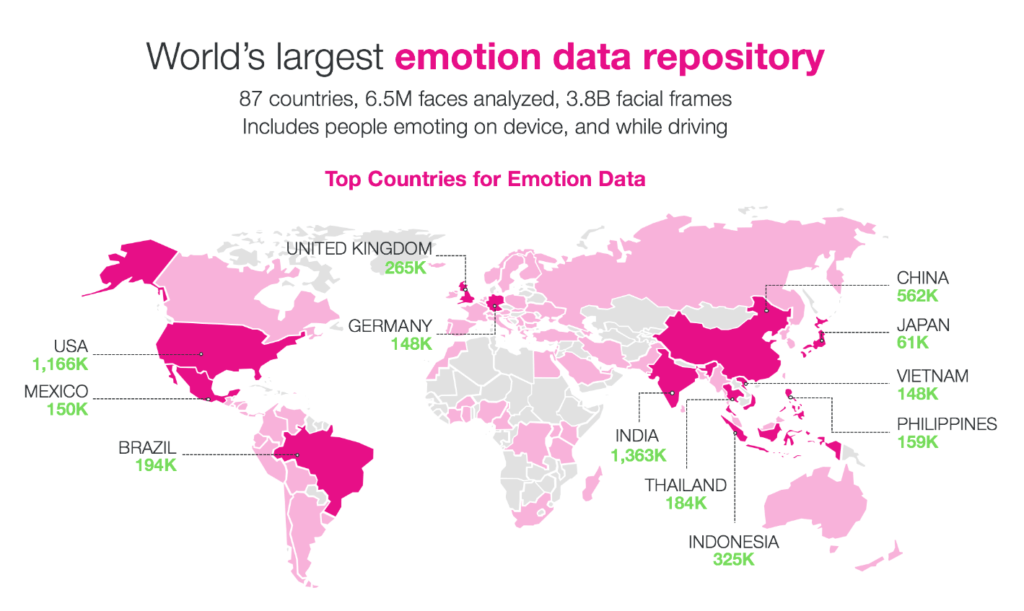

Machine learning is at the very core of Affectiva’s business model and is the basis of its product development. The company has developed an emotion data repository through the analysis of over 6 million faces from 87 countries. This data is used to train its deep learning (a branch of machine learning) algorithms to detect various emotion metrics such as contempt, anger, fear, joy, sadness and surprise.[iv]

Given the breadth of potential applications within the emotion artificial intelligence space, Affectiva can continue to leverage its emotion data repository as it expands and explores various opportunities. The continuous improvement of its algorithms and data repository will be key to its long-term success.

Automotive Artificial Intelligence – How will it work?

Using cameras installed in a car, Affectiva Automotive AI will monitor a driver’s facial expressions in real time to determine the driver’s cognitive and emotional state, leveraging a subset of its emotion data repository for automotive use cases. In addition, the system will detect and analyze voice activity in the vehicle[v]. The data collected will be provided to auto manufacturers, enabling them to build advanced monitoring systems that take appropriate action if unsafe conditions are detected. Automakers have begun installing cameras inside some new car models that track the movement of the driver’s head and eyes. Such camera systems, already in use in at least one General Motors car, will become standard equipment on many European cars in a couple of years.[vi]

Exhibit 1. Source: Affectiva

Management Strategy

Affectiva’s management has established strategic partnerships with other AI industry leaders such as Nuance Communications Inc, a developer of speech-recognition systems used in an estimated 200 million cars worldwide.[1] This partnership enabled the voice detection capabilities of the Automotive AI product. The integration with Nuance will begin to establish Affectiva in the automotive industry and deliver the industry’s first interactive automotive assistant that understands drivers’ and passengers’ complex cognitive and emotional states from face and voice and adapts its behavior accordingly[vii]. For the long term, the company is carefully considering the ethical implications of Automotive AI by avoiding the use of non-consensual data and evaluating ways to avoid introducing bias into its algorithms[viii], which is a primary concern in this space.

Recommendations

Based on the amount of data Affectiva has gathered in its emotion data repository for its automotive use cases (see Exhibit 1), I would recommend that the company target its Automotive AI product development at the United States market in the short term. Given Affectiva’s lack of experience in the automotive industry, the company should focus on working with select manufacturers in the US to test its technology extensively, gather data, refine its algorithms and establish itself in the US before attempting to expand into other markets.

[i] World Health Organization. (2018). Road Traffic Injuries, http://www.who.int/news-room/fact-sheets/detail/road-traffic-injuries

[ii] World Health Organization. (2018). Road Traffic Injuries, http://www.who.int/news-room/fact-sheets/detail/road-traffic-injuries

[iii] National Highway Traffic Safety Administration (2017) US DOT Releases Fatal Traffic Crash Data. https://www.nhtsa.gov/press-releases/usdot-releases-2016-fatal-traffic-crash-data

[iv] Affectiva (2018), http://go.affectiva.com/auto

[v] Affectiva (2018), http://go.affectiva.com/auto

[vi] Bray, Hiawatha. (2018, September) Back seat driver? New car cams may soon sense driver fatigue, texting, other distractions. https://www.bostonglobe.com/business/2018/09/27/back-seat-driver-new-car-cams-may-soon-sense-driver-fatigue-texting-other-distractions/z7qpS02xcjA49xMvgqeyhP/story.html

[vii] Affectiva and Nuance to Bring Emotional Intelligence to AI-Powered Automotive Assistants (2018) https://www.marketwatch.com/press-release/affectiva-and-nuance-to-bring-emotional-intelligence-to-ai-powered-automotive-assistants-2018-09-06

[viii] Press, Gil (2017, June) Emerging Artificial Intelligence (AI) Leaders: Rana el Kaliouby, Affectiva. https://www.forbes.com/sites/gilpress/2017/06/12/emerging-artificial-intelligence-ai-leaders-rana-el-kaliouby-affectiva/#67cb0d613d46

You recommend that Affectiva focus on US manufacturers in the short term, but I wonder if the company should consider a couple of other avenues. First, it could focus on state or federal governments to try to make this type of intelligence standard practice in cars by law. The benefits are obvious: the technology could mitigate one of the leading causes of non-natural deaths in the world. Second, Europe could make more sense as a target market. It is more receptive to legislation and regulation in general, and as you noted in your post, the technology is poised to become “standard equipment” in Europe.

Really interesting article! As I read, I considered all of the possible uses for this technology. Could Affectiva detect when a driver is under the influence? If so, could Affectiva prevent the car from starting? I see a lot of approaches that this company could take to increase road safety. However, I do think that people would be reluctant to have an emotion-reading sensor in their car. And, if this becomes a legal requirement, the population may be skeptical of “big brother.” While I think that there will be human drivers on the road for quite some time, cars will become more and more autonomous. If humans are no longer driving cars, this technology may become obsolete quickly. Nevertheless, advances in road safety should be aggressively pursued and I’m glad Affectiva is putting its technology to good use.

As you note, alcohol impairment, drowsiness, and distracted driving are responsible for a significant number of fatalities in the US and beyond. In fact, approximately 90% of motor vehicle crashes can be at least in part attributed to human error [1].

I agree that a significant percentage of these instances can be mitigated or avoided by the Automotive AI that Affectiva is developing. I also believe that this company faces multiple hurdles, including public perception of privacy violations and the impending threat of autonomous vehicle technology.

Another concern I have is the potential for false positive diagnosis of driver misbehavior. If the technology incorrectly identifies unsafe driving procedures, this could be met with annoyance by the drivers and the auto companies that are making these ultimate monitoring decisions. Because the burden likely falls on companies like GM or Ford, they might be hesitant to adopt this technology.
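To make the false-positive concern concrete, here is a minimal sketch of the underlying arithmetic (Bayes' rule). All of the numbers are made up for illustration and are not Affectiva figures; `positive_predictive_value` is a hypothetical helper name.

```python
def positive_predictive_value(sensitivity: float,
                              specificity: float,
                              prevalence: float) -> float:
    """Share of alerts that correspond to a genuinely unsafe driver.

    sensitivity: P(alert | driver is unsafe)
    specificity: P(no alert | driver is safe)
    prevalence:  P(driver is unsafe) at any given check
    """
    true_alerts = sensitivity * prevalence
    false_alerts = (1.0 - specificity) * (1.0 - prevalence)
    return true_alerts / (true_alerts + false_alerts)


# Illustrative assumption: a detector that is right 95% of the time in both
# directions, checking drivers who are unsafe only 1% of the time.
ppv = positive_predictive_value(sensitivity=0.95,
                                specificity=0.95,
                                prevalence=0.01)
print(f"{ppv:.1%} of alerts are genuine")  # prints "16.1% of alerts are genuine"
```

Under these assumed numbers, roughly five out of six alerts would be false alarms, simply because unsafe states are rare compared with safe ones. That is the kind of annoyance that could make a manufacturer hesitate.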

[1] Smith, Bryant Walker. (2013, December 18). Human Error as a Cause of Vehicle Crashes. Retrieved from http://cyberlaw.stanford.edu/blog/2013/12/human-error-cause-vehicle-crashes

Reading this raised a couple of interesting questions for me, such as: if a vehicle is emotionally aware of its driver, what type of interventions will this trigger? I imagine a scale of increasing invasiveness. On the low end, it could simply be an automatic response from the car to alert the driver, like when your car beeps because you start driving without being buckled. On the high end, the car could simply turn off if it registers that its driver is alcohol-impaired above some threshold. The further up this scale you are, I think, the more critical it will be to ensure that the algorithms are accurately assessing the drivers.
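As a rough sketch of what such a scale could look like in software, the snippet below maps a hypothetical impairment score and model confidence (both between 0 and 1) to an escalating intervention. The intervention names and every threshold are my own assumptions for illustration, not anything Affectiva or an automaker has published. The point is that the more invasive the step, the more confidence it demands.

```python
from enum import IntEnum


class Intervention(IntEnum):
    NONE = 0
    CHIME = 1             # comparable to the seat-belt beep
    SPOKEN_WARNING = 2
    LIMIT_SPEED = 3
    REFUSE_TO_START = 4


# Hypothetical ladder, most invasive first:
# (intervention, minimum impairment score, minimum model confidence)
LADDER = [
    (Intervention.REFUSE_TO_START, 0.90, 0.99),
    (Intervention.LIMIT_SPEED, 0.75, 0.95),
    (Intervention.SPOKEN_WARNING, 0.50, 0.80),
    (Intervention.CHIME, 0.30, 0.60),
]


def choose_intervention(impairment: float, confidence: float) -> Intervention:
    """Return the most invasive step whose two thresholds are both met."""
    for action, min_impairment, min_confidence in LADDER:
        if impairment >= min_impairment and confidence >= min_confidence:
            return action
    return Intervention.NONE


# A high impairment score with only moderate confidence falls back
# to a milder intervention instead of disabling the car.
print(choose_intervention(0.95, 0.999).name)  # REFUSE_TO_START
print(choose_intervention(0.95, 0.85).name)   # SPOKEN_WARNING
```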

I think your point at the end, to focus the rollout in the US and develop its algorithm there before expanding, was a good one. There is probably a product development tradeoff between expanding and focusing. On the one hand, geographical expansion will give you access to a much larger dataset, which is needed for these algorithms to develop statistically significant insights. On the other hand, focusing on the US will ensure the quality of the data is more uniform. If you aggregate emotion data from across the globe, it may actually make your predictions worse. The expression of anger in one country may look very different from its expression in another because of the way those emotions are conveyed. Or the baseline intensity of these emotions may differ across cultures.
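One common way to handle differing baselines is to score each reading relative to its own group rather than against a pooled global average. The sketch below does this with a per-group z-score. The group labels and intensity values are invented for illustration, and I am not suggesting this is how Affectiva treats its data.

```python
from collections import defaultdict
from statistics import mean, stdev


def normalize_by_group(samples):
    """Convert (group, raw_intensity) pairs into (group, z_score) pairs,
    where each z-score is measured against that group's own baseline."""
    by_group = defaultdict(list)
    for group, intensity in samples:
        by_group[group].append(intensity)

    # Each group needs at least two distinct readings for a usable spread.
    baseline = {g: (mean(v), stdev(v)) for g, v in by_group.items()}
    return [(g, (x - baseline[g][0]) / baseline[g][1]) for g, x in samples]


# Invented data: region B expresses the same emotion more intensely overall.
samples = [("A", 0.2), ("A", 0.3), ("A", 0.4),
           ("B", 0.6), ("B", 0.7), ("B", 0.8)]

for group, z in normalize_by_group(samples):
    print(group, round(z, 2))
```

In this toy data a raw reading of 0.4 in region A and 0.8 in region B both come out about one standard deviation above their local baseline, whereas a pooled model would treat the second as far more intense.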

As I think about the future of this technology, the immediate question that comes to mind is what it can be optimally and productively used for beyond automobiles. Given the rise of autonomous vehicles, I do think auto crashes are an important issue to tackle, but hopefully one that will significantly diminish over time with the rise of driverless cars. I wonder if Affectiva should instead focus its attention and resources on other human-operated processes that seem less likely to be automated in the medium term. Two that come to mind are airplane pilot operations and human-operated machinery. I also think the concern regarding non-consensual data and biases is extremely important. Although a lot of good can come from preventing humans from harming themselves or others when in a sub-optimal state, there is inherent danger in labeling facial expressions. Given the enormous range of cultural differences in how emotion is expressed, as well as obvious differences in facial composition across the globe, I worry that those with ill intent can exploit this technology for racial or professional discrimination, and I wonder what Affectiva is doing to prevent this and whether it is enough.

I’d be very curious to see other potential applications of the emotional identification ability. For example, could this be applied in care-taking situations such as hospitals or child-rearing? If so, is there a way to leverage this technology to scale these services to address skills shortages in developing nations / underserved markets?